Lately, I started using Notion for note-taking, to-do lists, and now for writing blog posts. Notion makes it simple to combine all those activities in one clean interface. Using Jekyll, GitHub Pages, and GitHub Actions, I import the posts in my Notion Blog database into Jekyll with a GitHub workflow that runs every hour.

What is a Notion Database?

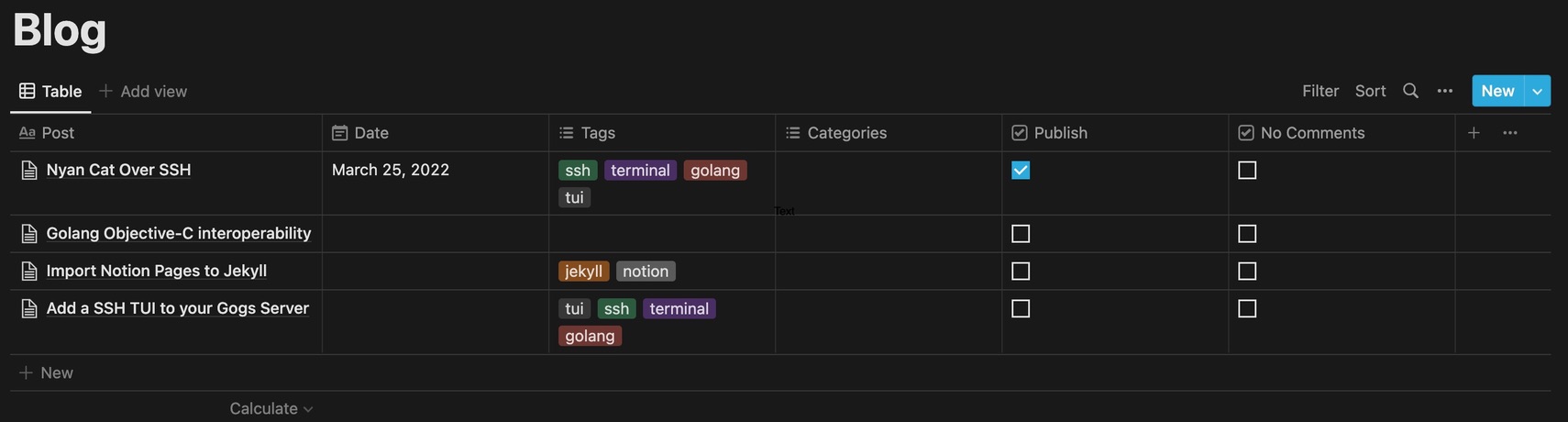

Notion databases are smart tables that hold a collection of pages with customizable properties and multiple layouts. Think of them as spreadsheets on steroids.

The Layout

Jekyll uses YAML front matter to specify a post's title, date, tags, and other properties. Using Notion database properties, we can map that front matter onto a simple Notion database: each entry corresponds to a Jekyll post, and its properties hold the post's front matter values. The script uses the date set on the entry as the post's publish date and falls back to the entry's creation date if none is set. To publish a page, check its “Publish” checkbox.
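To make the mapping concrete, here is a minimal sketch of how an entry could be turned into front matter. It is not the actual importer script: the property names "Name", "Date", and "Tags" are assumptions (only "Publish" is named above), and the function name `buildFrontMatter` is made up for illustration.

```javascript
// Build Jekyll front matter from a Notion page object.
// ASSUMPTION: the database has "Name" (title), "Date" (date) and
// "Tags" (multi-select) properties; rename these to match your own database.
function buildFrontMatter(page) {
  const props = page.properties;

  // A title property holds an array of rich-text fragments.
  const title = (props.Name?.title ?? []).map((t) => t.plain_text).join("");

  // Use the entry's date if one is set, otherwise fall back to its creation time.
  // Both are ISO 8601 strings, so the first 10 characters are YYYY-MM-DD.
  const date = (props.Date?.date?.start ?? page.created_time).slice(0, 10);

  const tags = (props.Tags?.multi_select ?? []).map((t) => t.name);

  // JSON.stringify yields double-quoted strings, which YAML also accepts.
  return [
    "---",
    `title: ${JSON.stringify(title)}`,
    `date: ${date}`,
    `tags: [${tags.map((t) => JSON.stringify(t)).join(", ")}]`,
    "---",
    "",
  ].join("\n");
}
```

The returned string can be placed at the top of the generated Markdown file, directly before the converted page body.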

The Script

Writing the importer script was a breeze using the Notion JavaScript SDK and souvikinator/notion-to-md. The SDK pulls the entries from the database, then notion-to-md converts each Notion page into Markdown ready to be used in Jekyll.
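For Jekyll to pick up a converted page, the Markdown file has to live in `_posts` and follow the `YYYY-MM-DD-title.md` naming pattern. A small sketch of how such a path could be derived is below; the helper names `slugify` and `postPath` are hypothetical, and this simple slug only keeps ASCII letters and digits.

```javascript
// Turn a post title into a URL-friendly slug.
// Note: characters outside a-z and 0-9 are dropped, so non-ASCII titles need more care.
function slugify(title) {
  return title
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-") // collapse each run of other characters into one dash
    .replace(/^-+|-+$/g, ""); // trim leading and trailing dashes
}

// Jekyll expects posts at _posts/YYYY-MM-DD-title.md
function postPath(date, title) {
  return `_posts/${date}-${slugify(title)}.md`;
}
```

For example, `postPath("2022-01-10", "Hello Notion")` gives a path inside `_posts` that Jekyll will treat as a post dated January 10, 2022.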

To use the script, first create a new Notion integration that can access your Blog database. Go to settings, choose integrations, click on “develop your own integration”, and create a new integration with the proper scopes.

After creating it, you will get an integration key that the script needs in order to work. Before running the script, invite your newly created integration to the Notion page that holds the database you want to use: click the “share” button on the database page and invite the integration you just created. It appears under the name you gave it when you created it.

This is how the script works:

    const { Client } = require("@notionhq/client");

    // Create a Notion client from an environment variable
    const notion = new Client({
      auth: process.env.NOTION_TOKEN,
    });

    // Wrap the work in an async function so that `await` can be used
    (async () => {
      // Query the database and filter out unpublished entries
      const response = await notion.databases.query({
        database_id: process.env.DATABASE_ID,
        filter: {
          property: "Publish",
          checkbox: {
            equals: true,
          },
        },
      });

      // Iterate over the results
      for (const r of response.results) {
        // build the post front matter
        // convert the page to markdown
        // write it to disk
      }
    })();
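One thing to keep in mind: a single `databases.query` call returns at most 100 entries, along with `has_more` and `next_cursor` fields for fetching the rest. If your blog database grows past that, something like the following sketch could collect every page. The function name `queryAllPublished` is made up for illustration, and the client is passed in as a parameter so the function is easy to try with a stub.

```javascript
// Collect every published entry by following Notion's pagination cursor.
// `notion` is any object exposing databases.query, such as the SDK Client.
async function queryAllPublished(notion, databaseId) {
  const pages = [];
  let cursor = undefined;

  do {
    const response = await notion.databases.query({
      database_id: databaseId,
      filter: { property: "Publish", checkbox: { equals: true } },
      start_cursor: cursor, // undefined on the first request
    });
    pages.push(...response.results);
    // Keep going only while Notion reports more results.
    cursor = response.has_more ? response.next_cursor : undefined;
  } while (cursor);

  return pages;
}
```

Inside the script, `await queryAllPublished(notion, process.env.DATABASE_ID)` would then stand in for the single query, and the loop over the results stays the same.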

Here, we’re using two environment variables to store the Notion integration token and the database ID. You can find the database ID in the Notion page URL: https://www.notion.so/<database_id>?v=<long_hash>
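If you would rather not pick the ID out of the URL by hand, a small helper can do it. This is only a sketch: `databaseIdFromUrl` is a hypothetical name, and it assumes the ID is the 32-character hexadecimal string at the end of the URL path, possibly preceded by a title slug.

```javascript
// Extract the database ID from a Notion database URL.
// ASSUMPTION: the ID is the 32 hex characters that end the URL path.
function databaseIdFromUrl(url) {
  const { pathname } = new URL(url); // the query string (?v=...) is not part of pathname
  const match = pathname.match(/([0-9a-f]{32})$/i);
  if (!match) {
    throw new Error(`No database ID found in ${url}`);
  }
  return match[1];
}
```

The returned value is what goes into the `DATABASE_ID` environment variable.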

You can find the importer script here.

Periodically Import Content

Using GitHub Actions, we can create a workflow that runs the script every hour and then publishes the content using GitHub Pages. The publishing step depends on your setup; I host my website on GitHub Pages because it is convenient.

The workflow runs the script, then commits all the changes back to the repository. That commit triggers GitHub Pages to deploy the website and publish the changes. Here is the workflow I’m currently using:

    name: Jekyll importer

    on:
      push:
      schedule:
        - cron: "0 */1 * * *"

    jobs:
      importer:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@master

          - uses: actions/setup-node@v2
            with:
              node-version: "17"

          - run: npm install

          - run: node _scripts/notion-import.js
            env:
              # Secret names are assumed to match the variable names
              NOTION_TOKEN: ${{ secrets.NOTION_TOKEN }}
              DATABASE_ID: ${{ secrets.DATABASE_ID }}

          - uses: stefanzweifel/git-auto-commit-action@v4
            env:
              GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
            with:
              commit_message: Update Importer posts
              branch: master
              commit_user_name: importer-bot 🤖
              commit_user_email: actions@github.com
              commit_author: importer-bot 🤖 <actions@github.com>

Here, I’m running the workflow every hour and using GitHub Secrets to store the Notion token and database ID, which are then passed to the script as environment variables.

That’s it for now! Go on and write your next post!
